Content migration has always been one of my favourite topics to explore, and during my career I have had the opportunity to take part in several successful and not so successful migration projects 🙂. This post presents my view of a content migration strategy that is focused on efficiency, performance and added value for organisations and stakeholders.

So, what does migration mean for Enterprise Content Management?

A content migration refers to moving digital content from one platform to another, usually during the replacement, upgrade or infrastructural changes of a digital content services platform. No migration scenario is exactly the same as the next, because there are so many different reasons that may lead organisations to consolidate and migrate data. Every company has its own needs and will face its own unique set of challenges.

Organisations that struggle with high costs, missing features, content silos and an aging architecture that limits flexibility, agility and the ability to provide new functionality to stakeholders often decide to rethink their Content Services Strategy. Most companies have their content distributed across different sources, and it’s common to run migration projects where customers centralise digital content from legacy sources onto Alfresco, aiming for a single, centralised repository for company content.

Migration Motivations

The main advantages of migrating your enterprise digital content:

- Allowing fully informed, hence better business decisions

- Decreasing cost of running many different applications and content repositories

- Mitigating risk by having centralised content

- Consolidating information for quick and easy access

- Managing permission rights, warranty processes, etc.

- Tracking trends, customer feedback, financial audits

- Meeting compliance and discovery rules mandated by the government and/or corporate policies

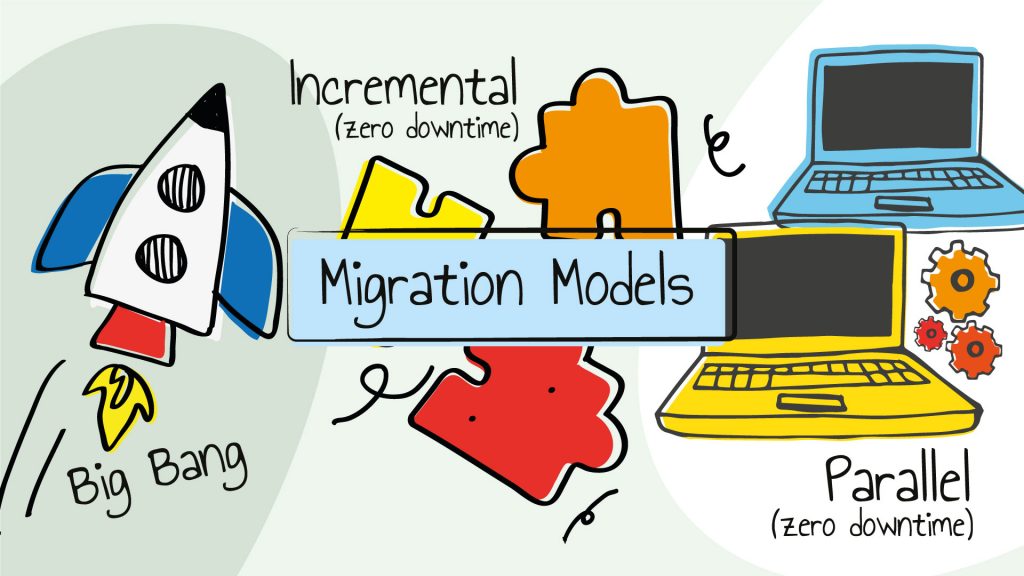

Migration Models

There are three main strategic models for migrating content: big bang, parallel and incremental. Each migration model has its own advantages, and the choice should be weighed against specific operational variables. It is very important to check which service level agreements exist in the organisation before choosing the appropriate migration model.

Big Bang

In this model, the migration happens all at once, over a pre-defined interval of time. The downside of this method is that if delays occur, or if the new system doesn’t work as planned, there may be a negative impact on the business.

Parallel (zero downtime)

In this model both old and new systems run together. When the content is loaded and thoroughly tested, a cutover is made. The advantage here is that there is little chance of problems and no service disruption, as the systems run together until the new one is fully tested and accepted. The downside is that synchronisation techniques must be used to keep both systems in sync while the new system is being tested.

Incremental (zero downtime)

This model allows for a gradual transition to the new system, one dataset at a time. The advantages are that you can start getting results sooner and that it’s easier to test smaller, incremental datasets. It requires techniques to handle the delta content, that is, content that was already migrated but then changed on the source while the migration was in progress.

I’ve never done a successful Big Bang migration project! In my opinion it’s only applicable to relatively small datasets, where you can guarantee that the migration timeline is very short. For the more complex and realistic scenarios that we see in big organisations, the incremental migration model is more common. It has its own set of challenges, but it has proven to be a safe choice that allows for quicker quality outcomes, as the new system can be tested earlier with smaller datasets.

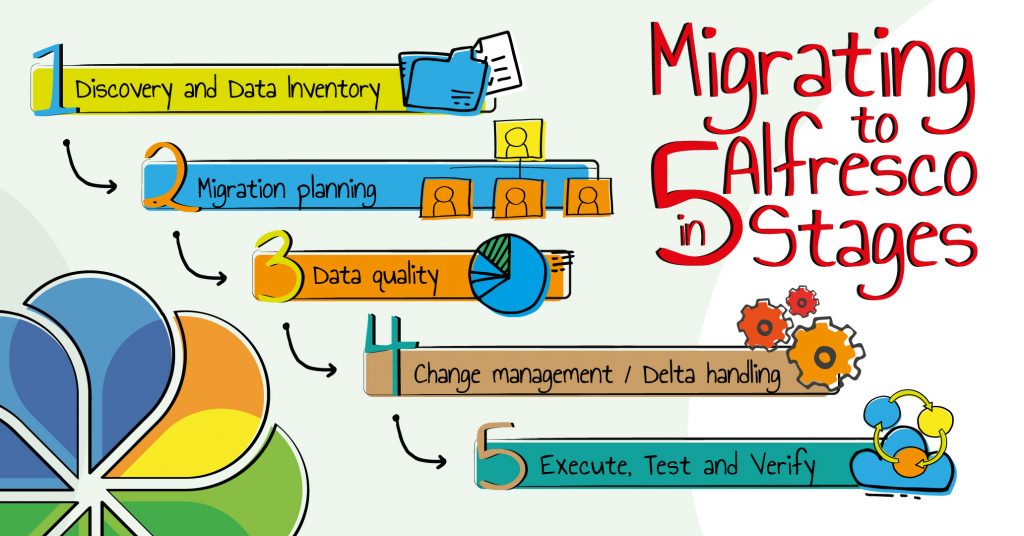

Migrating to Alfresco in 5 Stages

The migration process should be completed as seamlessly as possible with minimal service disruption and a change management approach, which minimizes impact on the business and provides the right level of support to users, so they are familiar with the new application.

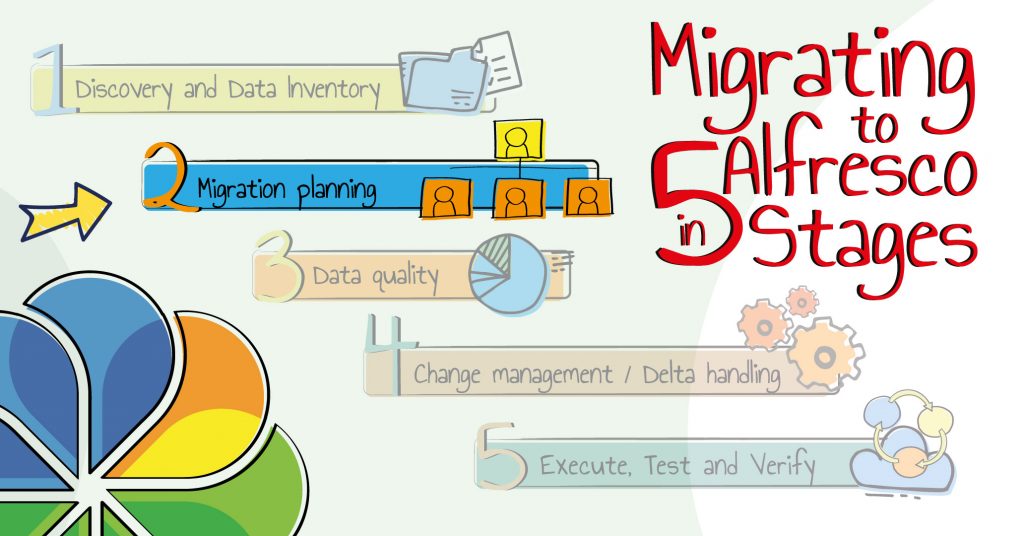

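The 5 key stages of a successful content migration are:

- Discovery and Data Inventory

- Migration Planning

- Data Quality

- Change Management

- Execute, Test and Verify

Orchestrating those foundations makes it possible for customers to implement a controlled and reliable process to migrate their legacy content into Alfresco.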

Building Quality outcomes in stages

The migration project should be designed to achieve specific goals, including analysis, mapping, high-level design, detailed-design, configuration, testing, deployment and other critical objectives. These objectives are reached in carefully ordered stages, so as to build quality outcomes upon the foundation of the preceding steps.

Stage 1 – Discovery and Data Inventory

The discovery stage of our methodology comprises a series of workshops with the customer’s technical and functional teams, who have insight into the legacy content system and will help to identify the content locations, volumes and migration targets. This stage addresses high-level business decisions surrounding the source content being migrated to the new target repository. Though content migration is in itself a technical process, it is essentially a business issue. It is recommended to engage a knowledgeable consultant to provide guidance through this discovery process. An experienced expert will be worth the expenditure, as their services will mitigate project risk by helping your team be aware of, and plan for, any and all eventualities. Collaborative strategy sessions must take place between business users, stakeholders and IT staff so that project goals are attained.

Data Inventory

Before migrating content, we carry out a content inventory that lists every single type of content on your existing repositories and produce a report with information about where and how it is stored, and how you will treat this in the migration. Implementing a content inventory is a key preliminary step in taking control of your enterprise’s existing content, allowing you to launch an effective content strategy and digital transformation. A content inventory is a quantitative and qualitative content assessment that provides a critical baseline for all your content analysis and content migration.

It’s important to clearly define the scope of the inventory, or how many systems/repositories you will include. For example, if you are considering an enterprise-wide content inventory, the best practice is to break it down into manageable chunks. Yes, you may need to overhaul several internal repositories and content sources, but we recommend approaching that problem in stages.

Data Inventory Goals

Define the goal of your inventory. You should be able to state your objectives, or the information you want the inventory to generate: “At the end of this inventory, I need to know how many documents I’m dealing with, roughly the types of content they contain and what shape they are in.” A very important goal of the content inventory is to identify content that can be decommissioned or archived, in other words content you don’t really need to migrate. Identifying content governance requirements and stakeholder relations is also a common goal. On our projects we use content inventory tools that help us keep the inventory in a shared location. This data should be accessible to all members of the migration project, which helps guarantee that no content is left behind!
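To make those goals a bit more concrete, here is a minimal sketch of an inventory for the simplest possible source, a plain file share. It is not the tooling we use on projects; the function names and the CSV columns are my own assumptions for illustration, and a real repository would need a proper connector instead of `os.walk`.

```python
import csv
import os
from collections import Counter
from datetime import datetime, timezone


def build_inventory(root):
    """Walk a directory tree and return one record per file found."""
    records = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            stat = os.stat(path)
            records.append({
                "path": os.path.relpath(path, root),
                # Lower-case the extension so ".PDF" and ".pdf" count together.
                "extension": os.path.splitext(name)[1].lower() or "(none)",
                "size_bytes": stat.st_size,
                "modified": datetime.fromtimestamp(
                    stat.st_mtime, tz=timezone.utc
                ).isoformat(),
            })
    return records


def summarise(records):
    """Count documents and total bytes per file extension."""
    counts = Counter()
    sizes = Counter()
    for record in records:
        counts[record["extension"]] += 1
        sizes[record["extension"]] += record["size_bytes"]
    return {ext: {"count": counts[ext], "bytes": sizes[ext]} for ext in counts}


def write_inventory(records, csv_path):
    """Store the inventory as CSV so the whole team can open and filter it."""
    fields = ["path", "extension", "size_bytes", "modified"]
    with open(csv_path, "w", newline="", encoding="utf-8") as handle:
        writer = csv.DictWriter(handle, fieldnames=fields)
        writer.writeheader()
        writer.writerows(records)
```

Calling `summarise(build_inventory("/some/share"))` answers the "how many documents, of which types" question, and the CSV written by `write_inventory` is the kind of shared, easy-to-process output the inventory should produce.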

We normally use a crawler plus some context scenarios, but you can use whatever combination of tools you choose. The goal is to have the results stored in a format that can be easily understood, analysed and processed. A very good solution for crawling is Apache ManifoldCF, as it has connectors for most of the existing content repositories. During the migration project we can create an output connector to generate our content inventory. The diagram below shows some of the connectors currently present in Apache ManifoldCF.

Source Content Analysis

After collecting and generating the content inventory report you should analyse its contents, ultimately trying to identify relationships, paths and patterns in the content that can help you in the planning stage. It’s important to move from a quantitative to a qualitative view of the content, enabling the team to focus only on content that has quality and relevance for the enterprise.

For every piece of content, you should be able to answer these questions:

- Is the content necessary, or does it need to be deleted or archived?

- Will the remaining content migrate or stay behind (and if stays behind, will it be deleted or archived)?

- How much clean up and revision does the migrating content require? Does the content have any special technical requirements that might involve custom coding or other accommodations?

- Is metadata being used consistently to categorize or “tag” information? Consistent use of metadata tagging means more opportunity to automate and have technologies push and pull content across business processes. Information that is of good, reliable quality and consistently structured will be found, read and re-used more frequently.

Remember, automated migration processes often require a manual clean up phase afterwards.

Source Content Security Audit

Organizations must understand what information they create and hold. What level of sensitivity is your information? How many categories of confidentiality or secrecy exist? Are the rules for each category of information clearly understood by your employees and contractors? Are they trained on appropriate handling, sharing and disposal policies?

From a technical perspective, ensure an application cannot become an entry way into other corporate systems or lead to a denial of service attack. How does information flow through firewalls, where are database calls?

Stage 1 Collaterals

The collaterals of the discovery stage are a set of documents containing insights into the existing content, the different content types and their locations. They provide a clear understanding of the current system usage scenarios, data volumes and migration targets, and support the next stage, the migration plan. The documents created at the discovery stage are:

- Content Inventory Report

- Content Inventory Analysis

- Content Security Audit

- Content Integrations Inventory

Stage 2 – Migration Planning

A good migration plan involves the definition of the Information architecture, the content strategy for digital transformation and it aims to develop an improved user experience while interacting with enterprise content. Remember each migration is unique, and will likely require an individual mix of automated tools and manual human resources.

No company should embark on a project for moving their digital content without a reliable plan and a well defined enterprise data migration strategy. There are some key principles that can be applied in any enterprise data migration project.

Organisation Expectations

Perhaps the most important part of the strategic planning process is to interview the stakeholders within the organisation, including any departments, teams and individuals who will be involved in or impacted by the migration. Not only must management be aware of their needs and capabilities as they relate to the migration, but they also must be briefed in advance of the process so that they can make any concerns known. Review and refine the target based on the source information to identify gaps that exist or enhancements needed.

Identifying migration Assets

A similar accounting must be made for any assets involved in the migration, such as information architecture, content sources, business processes, regulated content and standards for compliance, formatting and templates. Migrations must be evaluated and planned based on a number of factors. The needs of the business and the quantity and state of the business, source documents and metadata are major components to take into account. Once you gather information on your content, you need to analyze its strengths and weaknesses and develop an overall strategy. Remember, when you are migrating your content you are also shifting your digital content culture. In the migration plan stage, we set up a core content migration team composed of content managers and IT, and we add to that team satellite support from individual business units.

Care about the Details

There are also many complex elements within these different areas of consideration, which must all be accounted for when planning a migration. These include the people who will be using the new content services solution, the different source repositories versions being migrated and any associated dependencies. Understanding content complexities, relationships, quality, and volume is vital for a successful planning of the migration.

Data Security

Migration planners must consider the security of the data, establish whether there is a need for external links and other types of data and determine the structure of references and data dictionaries (if applicable), as well as the types of forms, templates, objects and document indices personnel will be utilizing.

Risk Mitigation

A risk analysis should include the building and testing of a prototype system. Representative data will help define performance requirements and serve as a benchmark during testing for software/hardware compatibility, downtime, and budgetary projections. Running the migration program completely against a representative set of the content in a lab environment before running it live represents a crucial step to mitigate the migration risks. When the risks have been investigated, results should be signed off on by the migration team. Keep a copy of the original content for testing and training purposes in the event part of the migration does not work and you need to go back. Note that the migrated content has been restructured for the new system and context will have changed, hence it will be difficult to compare. Have ready a contingency plan that can be applied in case of the unlikely event of things going really wrong. This will help the business to feel confident moving forward with the content migration.

During the planning stage it’s important to consider existing document handling and archiving procedures, as those may need to change. As a general rule, it’s important to familiarise yourself with Alfresco and understand how it handles everything from permissions, relationships, content locations and storage possibilities to archiving and optional modules (for example records management).

When you migrate your data to Alfresco, you don’t necessarily need to migrate the actual binary data, or binary objects, depending on where they reside and on your migration strategy. You can migrate the existing data in your system that points to those objects and establish the structure that you use for finding and retrieving those objects in Alfresco. When considering migrations to the cloud, the market has offerings that allow a fast transfer of binary data, in its current state, into the cloud provider’s infrastructure. AWS, for example, offers the Snowball service, but there are other options.

Permission Mapping

Every content repository normally has its own set of users, groups, permissions and ACLs. On a migration project, the target system is normally able to synchronize the authorities (users, groups and memberships) that are currently used, and will continue to be used, for authentication and authorization purposes. Alfresco allows for synchronization of users and groups from the company directory system (Active Directory, LDAP, …).

Some projects require that we also migrate the permissions for the existing content. This is normally done by defining a mapping strategy that indicates how permissions on the source legacy systems map to the Alfresco permission model.
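As a rough illustration of such a mapping strategy, the sketch below translates source ACL entries into Alfresco roles. The target values are the standard Alfresco roles (Consumer, Contributor, Collaborator, Coordinator), but the source permission names and the `map_acl` helper are hypothetical; every legacy system has its own vocabulary and the real table comes out of the planning workshops.

```python
# Hypothetical source permission levels mapped to standard Alfresco roles.
# The left-hand side is an assumption; replace it with the legacy system's terms.
PERMISSION_MAP = {
    "read": "Consumer",
    "create": "Contributor",
    "write": "Collaborator",
    "full_control": "Coordinator",
}


def map_acl(source_acl, authority_map=None):
    """Translate (authority, permission) pairs into (authority, Alfresco role).

    Returns two lists: the mapped entries and the entries that could not be
    mapped, so that unknown permissions are reported rather than dropped.
    """
    authority_map = authority_map or {}
    mapped, unmapped = [], []
    for authority, permission in source_acl:
        role = PERMISSION_MAP.get(permission.lower())
        if role is None:
            unmapped.append((authority, permission))
            continue
        # Rename the authority if the directory sync uses a different name
        # on the target (for example a GROUP_ prefix for groups).
        mapped.append((authority_map.get(authority, authority), role))
    return mapped, unmapped
```

Keeping the unmapped entries in a separate list is a deliberate choice: they are exactly the cases that need a decision from the business before the execution cycles start.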

Stage 2 – Collaterals

The documents created at the planning stage are:

- Migration Plan

- Migration Infrastructure

- Migration ToolKit

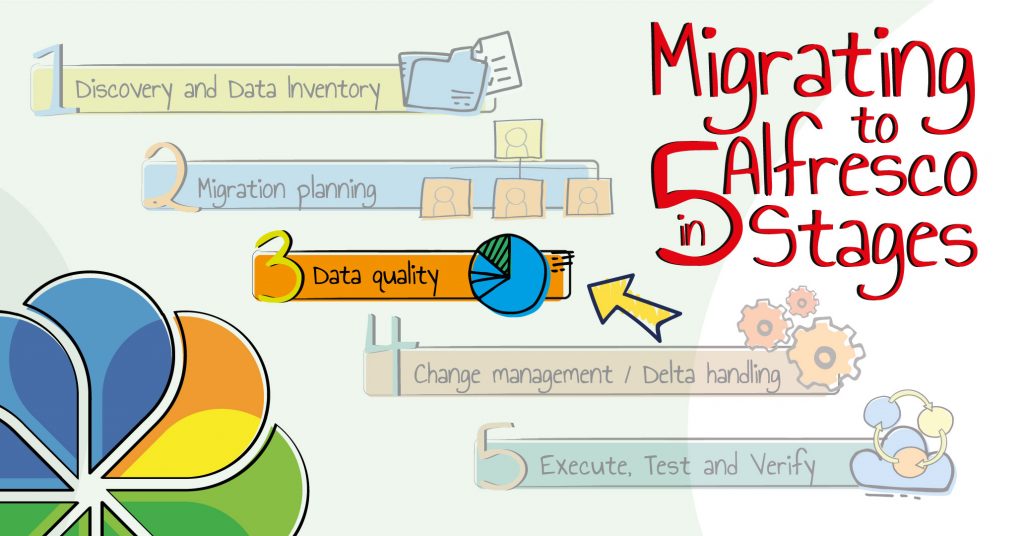

Stage 3 – Data Quality

The challenges of a content migration can be compared to the challenges faced when moving house. We need to figure out what is really important and what isn’t, and then we can throw out or archive what we don’t really need. We may also consider changing the setup of our new house.

Enterprise digital content can be seen as an organism that can change, adapt and evolve in the scope of a migration project. As a result of possible changes to the existing data model, and the way Alfresco Content Services products work, some of your legacy data may not be migrated one-for-one, because it does not map directly. This represents an opportunity to implement a clever digital transformation of the way the organisation interacts with its digital content (auto-tagging, content categorisation, …), increasing efficiency and maximising the value of the stakeholders’ investment.

The simplest migration scenario is one where we are only looking to migrate the metadata and the binary elements of the data. In this case we execute a mapping plan from the source metadata fields into the target system. Normally, in these scenarios we take the opportunity to do some data normalization or enrichment. That is applicable assuming we’re working with highly structured data. Structured data has characteristics that can be programmed against, but not all sources of data in a migration project contain structured data.
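A mapping plan for structured data can be as simple as a lookup table plus a few normalisation rules. The sketch below is only an illustration: the legacy field names, the `acme:documentDate` custom property and the assumed DD/MM/YYYY source date format are all hypothetical, while `cm:title`, `cm:description` and `cm:author` are properties of the standard Alfresco content model.

```python
from datetime import datetime

# Hypothetical legacy field names mapped to target property names.
FIELD_MAP = {
    "DocTitle": "cm:title",
    "Summary": "cm:description",
    "Owner": "cm:author",
    "DocDate": "acme:documentDate",  # hypothetical custom property
}


def normalise(value):
    """Collapse repeated whitespace in strings; leave other values untouched."""
    return " ".join(value.split()) if isinstance(value, str) else value


def to_iso_date(value):
    """Convert a DD/MM/YYYY string (assumed source format) to ISO 8601."""
    return datetime.strptime(value, "%d/%m/%Y").date().isoformat()


# Per-field enrichment or conversion rules, applied after normalisation.
TRANSFORMS = {"DocDate": to_iso_date}


def map_metadata(source):
    """Return (target_properties, leftovers) for one source document."""
    target, leftovers = {}, {}
    for field, value in source.items():
        if field not in FIELD_MAP:
            # Keep unmapped fields visible so nothing is lost silently.
            leftovers[field] = value
            continue
        value = normalise(value)
        transform = TRANSFORMS.get(field)
        if transform and value:
            value = transform(value)
        target[FIELD_MAP[field]] = value
    return target, leftovers
```

The leftovers are worth reviewing with the content owners: they are either fields that can be dropped or gaps in the target model.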

Unstructured data is often the result of years of organic content growth inside the enterprise, with multiple authors, multiple authoring environments and no uniformity, resulting in the lack of a common structure. In these cases, we need to be more creative and plan for a higher degree of orchestration, so that unstructured data can be correctly structured and enriched into a unified digital content structure.

The current Alfresco technology offers the possibility to leverage very cheap (slower access) binary storage (e.g. Amazon Glacier) via its Glacier connector. This new functionality makes it possible to archive less used content at very reduced costs.

The data quality stage should ideally include a content clean-up plan. Some de-duplication projects I know of have reduced the size of their repository by 50%, leading to huge savings in storage and compute power. Nowadays, enterprises are managing large amounts of data of a complex nature, and in most cases we find a number of duplicates (both files and folders) as well as ROT (redundant, obsolete and trivial) data. Defining a content clean-up plan helps to reduce the size and increase the relevancy of the content on the target repository.

No company wants to take pollution from the old system to the new environment. It is, therefore, necessary to clean up by deduplication, classification and restructuring. Content owners must be encouraged to sift and sort through information, removing outdated or redundant content, thus reducing the volume of information to be moved.
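One common way to find exact duplicates is to group files by a hash of their binary content. The following is a minimal sketch under that assumption; the function names are mine, it only catches byte-identical files (not near-duplicates or renditions), and it says nothing about which copy should survive, which is a decision for the content owners.

```python
import hashlib
from collections import defaultdict


def file_digest(path, chunk_size=1024 * 1024):
    """Return the SHA-256 hex digest of a file, read in chunks to limit memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def find_duplicates(paths):
    """Group paths whose content is byte-identical.

    Returns a list of groups; each group is a sorted list of two or more paths.
    """
    groups = defaultdict(list)
    for path in paths:
        groups[file_digest(path)].append(path)
    return [sorted(group) for group in groups.values() if len(group) > 1]
```

The resulting groups can feed the revisited content inventory, where each group is reviewed and all but one copy is flagged for removal or archiving.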

Stage 3 – Collaterals

The documents created at the data quality stage are:

- Revisited Content Inventory (i.e. Content cleanup plan/de-duplication)

- Content Enhancement Plan (metadata mapping, auto-classification, GDPR, AI,…)

- Content Archiving Plan

Stage 4 – Change Management

The change management stage covers the definition of techniques to handle delta content, that is, content that was created or changed in the legacy systems during the migration process.

Once the execution cycles are complete, we must ensure we have mechanisms (rescan) that can identify any new items or items that were not migrated previously. This is called delta content migration, a feature that is mainly used in staged migrations.

Delta content

Any content that is added, deleted, or modified during the defined delta period. We can normally identify this content. Content freeze cycles can help to improve the change management process.

Delta content migration

The process that eliminates the need for long content freeze cycles. It identifies content that has been created, edited or deleted between the initial extract and the second extract, which is taken at the close of the delta period once the main data migration is completed. A short freeze is still necessary while delta content is extracted from the legacy system and provided to the vendor to add to the target system, but it is a considerably shorter window.

Identifying delta content is normally done by inspecting the system modification date (update), system creation date (create) and system archive date (delete) attributes. Depending on the genesis of the source repositories those attributes may have different names, but experience tells us that they are normally available. In a nutshell, “we must provide mechanisms to distinguish what has been added/deleted/modified within the delta timeframe”.
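The date-based approach described above can be sketched in a few lines. This is an illustration only: the attribute names `created`, `modified` and `archived` are placeholders for whatever the source repository calls them, and the rule that a deletion takes precedence over the other two is my own assumption.

```python
def classify_delta(items, delta_start, delta_end):
    """Sort items into added / modified / deleted for a delta window.

    Each item is a dict with an "id" and optional "created", "modified" and
    "archived" datetimes (hypothetical attribute names). Items untouched
    during the window are left out of the result.
    """
    def in_window(timestamp):
        return timestamp is not None and delta_start <= timestamp <= delta_end

    delta = {"added": [], "modified": [], "deleted": []}
    for item in items:
        if in_window(item.get("archived")):
            # Deletion wins: there is no point migrating content that is gone.
            delta["deleted"].append(item["id"])
        elif in_window(item.get("created")):
            delta["added"].append(item["id"])
        elif in_window(item.get("modified")):
            delta["modified"].append(item["id"])
    return delta
```

The three lists map directly onto the follow-up work: added items are migrated, modified items are re-migrated, and deleted items go on the clean-up list for the target repository.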

Advantages of a delta migration include:

- Less time in the content freeze period

- More time for content validation and clean up

- The ability to incrementally receive content into the target system

Content Freeze

Content freeze cycles exist to avoid people updating content on the old repositories when you have already migrated to the new. Likewise, freezing content during testing and proofing cycles on the new repository is recommended.

As with any process, there is a downside. When working with a delta migration, the content that has been deleted in the delta period will have to be manually tracked and deleted as part of the final content clean up.

Stage 4 – Collaterals

- Delta Content Migration Plan

- Freeze Cycles Definitions

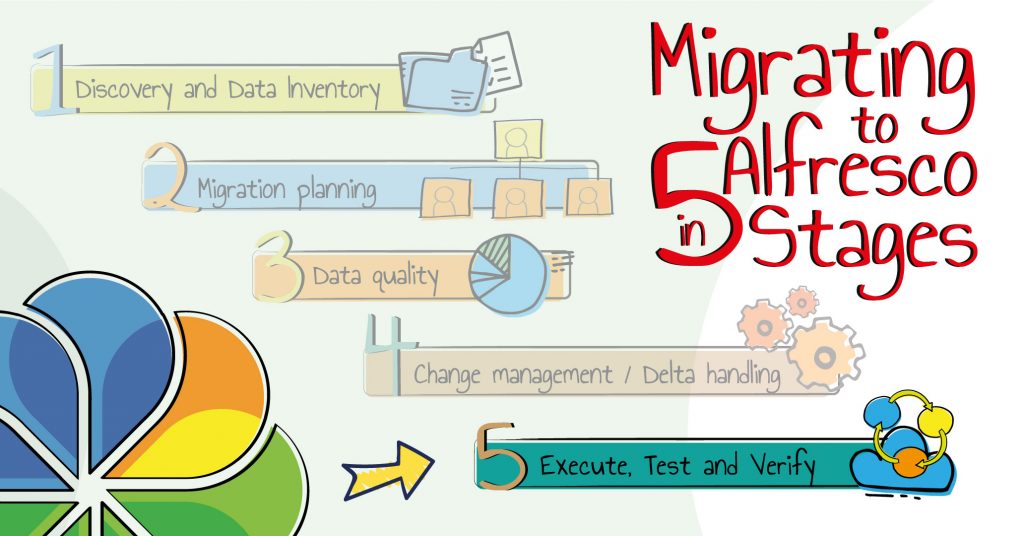

Stage 5 – Execute, Test and Verify

This stage is responsible for the execution of the plans defined on the previous stages. It encapsulates a series of execution cycles, first on a QA environment with a reduced set of data to validate the migration plans and subsequently on Production.

Successfully migrating data without compromising its integrity and accessibility, and effectively managing that data after its migration, is essential to the success of a migration project. The purpose of the validation stage is to define mechanisms that guarantee the quality of the migrated data. The migration project is not finished until there is confirmation that the source content has been successfully moved into the new target. Build checklists to make content movement and integrity easier to check and track. Migration expectations against actual results should be documented in a report to validate migration completion and quality.
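One possible building block for such a report is a comparison of two manifests, one extracted from the source and one from the target. The sketch below assumes each manifest is a dictionary that maps a stable document identifier to a checksum of the binary; both the identifier scheme and the `compare_manifests` helper are hypothetical, and a real validation would also cover metadata and permissions.

```python
def compare_manifests(source, target):
    """Compare {document_id: checksum} manifests and summarise the differences."""
    source_ids, target_ids = set(source), set(target)
    common = source_ids & target_ids

    missing = sorted(source_ids - target_ids)      # expected but not migrated
    unexpected = sorted(target_ids - source_ids)   # on target, not in source
    mismatched = sorted(i for i in common if source[i] != target[i])

    return {
        "expected": len(source),
        "migrated": len(common),
        "missing": missing,
        "unexpected": unexpected,
        "mismatched": mismatched,
        # Extra documents on the target are reported but do not fail the run.
        "passed": not (missing or mismatched),
    }
```

Because content is usually restructured on the way in, comparing by a stable identifier rather than by path tends to be more robust; the path in the new repository is rarely the same as in the old one.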

A good audit will involve conversations with end users to ensure everybody is happy with their new single view of information. These are the people with daily hands-on use and knowledge of the new model, and often the true “test” of success is user acceptance.

- Content review by subject matter experts and business units.

- Content review by strategists and editors, in order to translate SME feedback into consumable content that fits the overall content strategy

- Testing on all the different interfaces in use, to establish whether your content displays correctly in each of them.

Stage 5 – Collaterals

The documents created at the execute stage are:

- Content Migration Report (Contains information about the content that was migrated on the execution stage and its status on the final destination)

- Content Validation Report (contains validation data from SME on areas such as metadata mapping, data quality, permissions and data enrichment)

Housekeeping

A final step is to save and archive the migration toolkit and scripts. Content migration is often a one-time exercise; however, with the right tools, the protocols, mappings and scripts can be reused in future projects within the organisation. A documented report of the migration process will serve as a repeatable reference guide.

Conclusion

Enterprise content migration can be a painful and risky process, or even an outright failure, for a number of reasons. Meticulous planning and a good methodology are the best immunisation against these risks. For businesses looking to change, upgrade or consolidate their content-centric solution with Alfresco, I hope this post has pointed out the benefits of an effective migration strategy.

Please send your comments; your feedback is very important to me.