Having a sticker indicating the usage of AI for some tasks doesn’t mean that the task will be completed successfully: there are many ways to solve a problem. So, if you have a problem to solve with your data, why not use the best set of tools to solve it? That’s the goal of Texter Machine Learning (TML).

Introduction

Enterprise Content Management (ECM) systems usually hold many different kinds of information. Automating processes over that content requires a set of tools that work together to handle each kind.

Texter Machine Learning (TML) is an aggregator of Machine Learning (ML) engines: instead of relying on a single ML engine, it’s possible to aggregate the results of several engines, to improve overall results.

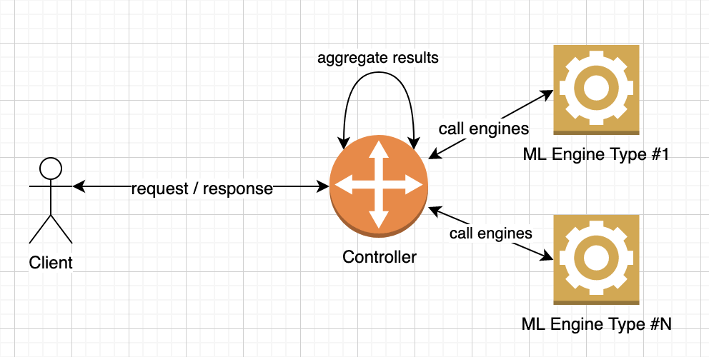

Architecture

To process a request from a client, TML contacts one or more ML engines and aggregates their results in order to return the best result to the client:

Controller

The Controller receives requests and decides which engines to call and how to call them; most of the business logic can be implemented here.
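As an illustration of the kind of logic a controller might hold, here is a minimal sketch that calls several engines and merges their answers, highest confidence first. The `Engine` interface, the `Detection` record and the sample values are hypothetical names made up for this example, not TML's actual API; it assumes Java 16 or later.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.function.Function;

class AggregationSketch {

    /** Hypothetical result type: one recognized item and the engine's confidence in it. */
    record Detection(String engine, String infoType, String associatedInfo, double trust) {}

    /** Hypothetical engine abstraction: takes the file bytes, returns what it recognized. */
    interface Engine extends Function<byte[], List<Detection>> {}

    /** Calls every engine in turn and returns all detections, highest trust first. */
    static List<Detection> aggregate(List<Engine> engines, byte[] file) {
        List<Detection> all = new ArrayList<>();
        for (Engine engine : engines) {
            all.addAll(engine.apply(file));
        }
        all.sort(Comparator.comparingDouble(Detection::trust).reversed());
        return all;
    }

    public static void main(String[] args) {
        // Two fake engines returning made-up values, for illustration only.
        Engine plates = f -> List.of(new Detection("licensePlate", "licensePlate", "AA-00-AA", 0.92));
        Engine faces = f -> List.of(new Detection("WatsonFD", "face", "", 0.75));
        aggregate(List.of(faces, plates), new byte[0]).forEach(System.out::println);
    }
}
```

A real controller could apply a different merge policy (voting, per-type thresholds, engine priorities); sorting by confidence is only the simplest choice.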

Because we have a well-defined API, it’s possible to replace the controller with another one, without stopping the client or changing its code.

Several example controllers are already available, and a new one is easy to create using a Maven archetype project.

We rely on SpringBoot to provide the foundation for a micro-service environment, if necessary.

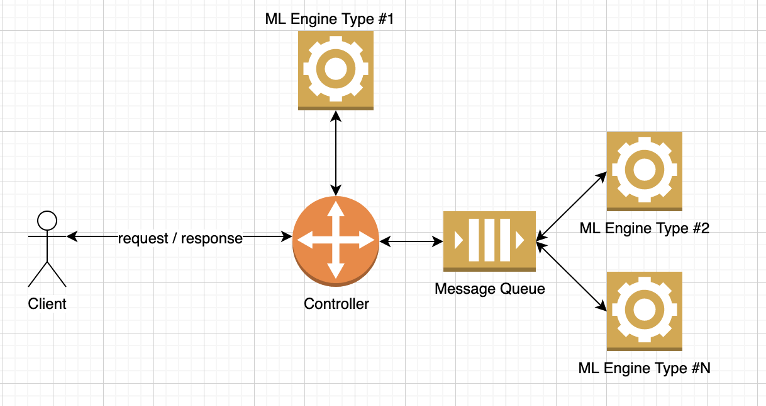

Adapters

To interact with ML engines, TML introduces the notion of an Adapter: a standard way to communicate with them.

With adapters, an engine can be used directly in the Controller or distributed across a network using message queues.
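The two deployment options can be sketched as two implementations of one interface. Everything named here (`EngineAdapter`, `InProcessAdapter`, `QueuedAdapter`) is hypothetical, and an in-memory `BlockingQueue` with a worker thread stands in for a real message broker, so this only shows the shape of the idea, assuming Java 16 or later.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.TimeUnit;
import java.util.function.Function;

class AdapterSketch {

    /** Hypothetical adapter contract: the controller only ever sees this interface. */
    interface EngineAdapter {
        String engineName();
        String analyze(byte[] file);
    }

    /** Runs the engine in the same JVM as the controller. */
    static final class InProcessAdapter implements EngineAdapter {
        private final String name;
        private final Function<byte[], String> engine;

        InProcessAdapter(String name, Function<byte[], String> engine) {
            this.name = name;
            this.engine = engine;
        }

        @Override public String engineName() { return name; }
        @Override public String analyze(byte[] file) { return engine.apply(file); }
    }

    /** Sends each request through a queue to a worker thread, mimicking a remote engine. */
    static final class QueuedAdapter implements EngineAdapter {
        private record Request(byte[] file, CompletableFuture<String> reply) {}

        private final String name;
        private final BlockingQueue<Request> queue = new LinkedBlockingQueue<>();

        QueuedAdapter(String name, Function<byte[], String> engine) {
            this.name = name;
            Thread worker = new Thread(() -> {
                try {
                    while (true) {
                        Request request = queue.take();
                        try {
                            request.reply().complete(engine.apply(request.file()));
                        } catch (RuntimeException e) {
                            request.reply().completeExceptionally(e);
                        }
                    }
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
            worker.setDaemon(true); // do not keep the JVM alive for this sketch
            worker.start();
        }

        @Override public String engineName() { return name; }

        @Override public String analyze(byte[] file) {
            Request request = new Request(file, new CompletableFuture<>());
            queue.add(request);
            try {
                return request.reply().get(5, TimeUnit.SECONDS);
            } catch (Exception e) {
                throw new IllegalStateException("engine '" + name + "' did not answer", e);
            }
        }
    }

    public static void main(String[] args) {
        Function<byte[], String> fakeEngine = f -> "{\"bytes\": " + f.length + "}";
        EngineAdapter local = new InProcessAdapter("ocr", fakeEngine);
        EngineAdapter remote = new QueuedAdapter("ocr", fakeEngine);
        System.out.println(local.analyze(new byte[4]));
        System.out.println(remote.analyze(new byte[4]));
    }
}
```

Because both classes satisfy the same interface, the calling code does not change when an engine moves from in-process to queue-backed.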

Once again, we use SpringBoot to provide a micro-service architecture, so the engines can scale according to business needs.

ML Engines – Available ML Engines

Because the engines do the hard work, they also need to be configured and trained in order to provide the best results over time.

TML makes available, right from the start, the following ML engines:

- Natural Language Processing (NLP)

- Computer Vision

- Optical Character Recognition (OCR)

ML Engines – What about other ML Engines?

Because TML has a standard adapter approach, once an adapter is created for a new ML engine, that engine can be deployed on a network and scaled according to business needs.

Texter also has years of experience in AI modelling and in building engines for specific needs, such as:

- License plate recognition

- Data extraction and analysis of technical documents (technical drawings)

- Content auto-classification

- Re-branding (e.g. automatic replacement of logos)

Experience in creating new ML engines, combined with a product that can deploy them at scale, is a key factor for success.

Inputs and Outputs

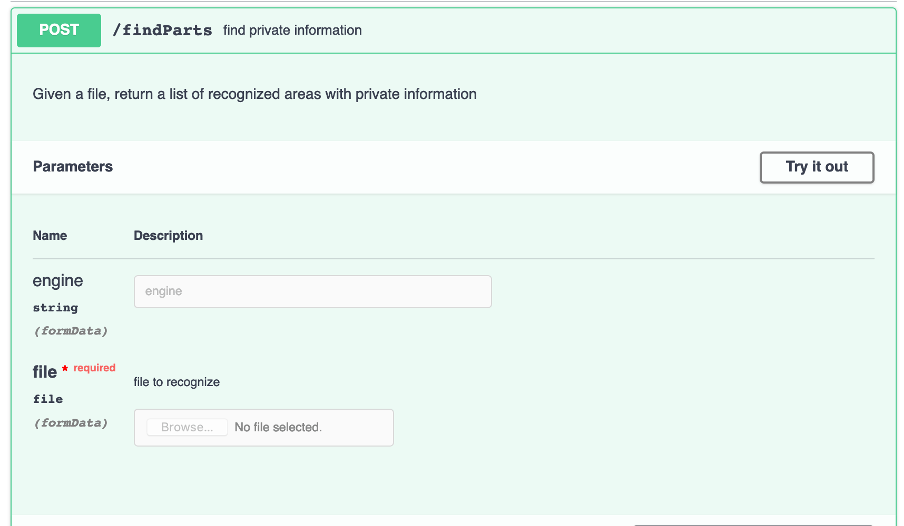

TML receives the file to be analyzed and the engine(s) to use. If no engine is provided, the default one defined in the Controller is used. To specify more than one engine, pass a comma-separated list of engines.

If there is no error, the output is a JSON document with information about the recognized data and its location.
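The engine-selection rule can be sketched in a few lines. The class name, method name and the `"ocr"` default below are assumptions made for illustration; how TML actually parses the parameter is not shown in this article. The sketch assumes Java 16 or later.

```java
import java.util.Arrays;
import java.util.List;

class EngineSelection {

    /** Splits a comma-separated engine list; falls back to the default when none is given. */
    static List<String> parse(String enginesParam, String defaultEngine) {
        if (enginesParam == null || enginesParam.isBlank()) {
            return List.of(defaultEngine);
        }
        List<String> engines = Arrays.stream(enginesParam.split(","))
                .map(String::trim)
                .filter(name -> !name.isEmpty())
                .distinct()
                .toList();
        return engines.isEmpty() ? List.of(defaultEngine) : engines;
    }

    public static void main(String[] args) {
        System.out.println(parse("licensePlate, WatsonFD", "ocr")); // [licensePlate, WatsonFD]
        System.out.println(parse("", "ocr"));                       // [ocr]
    }
}
```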

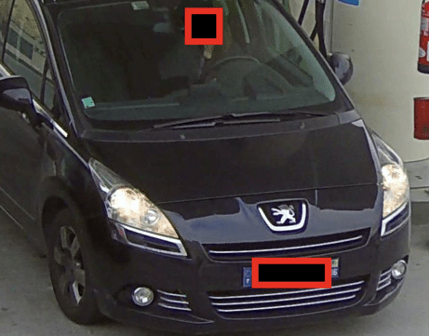

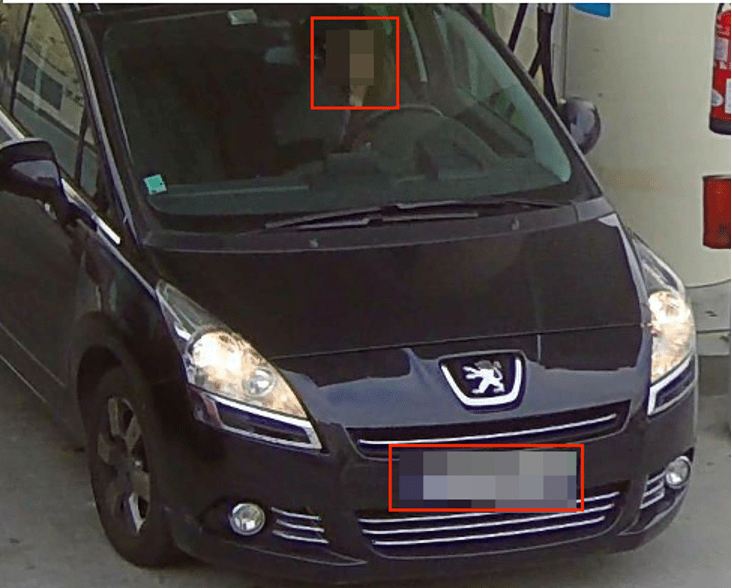

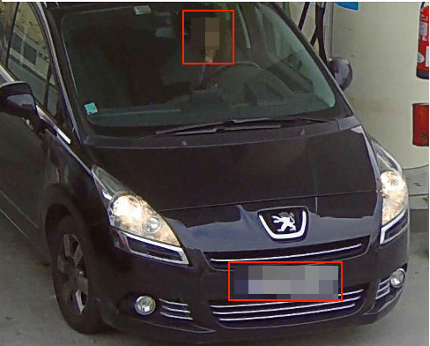

Let’s see an example of TML’s capabilities. Given the following picture (because of GDPR, we can’t publish PII without consent, so the PII was pixelated and surrounded by a red box):

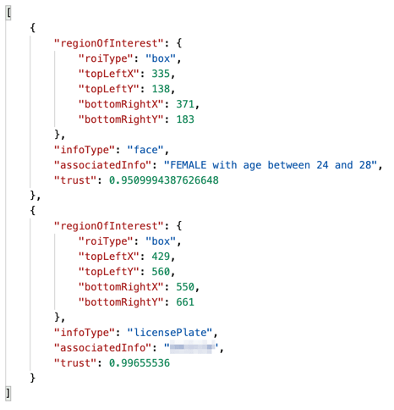

and indicating that we’re interested in the licensePlate and WatsonFD ML engines, TML returns the following result:

As we can see, “regionOfInterest” reports the location of the recognized information, “infoType” gives the type of information, “associatedInfo” holds the information itself, and “trust” is a metric between 0 and 1 indicating how confident the engine is in the recognized information.

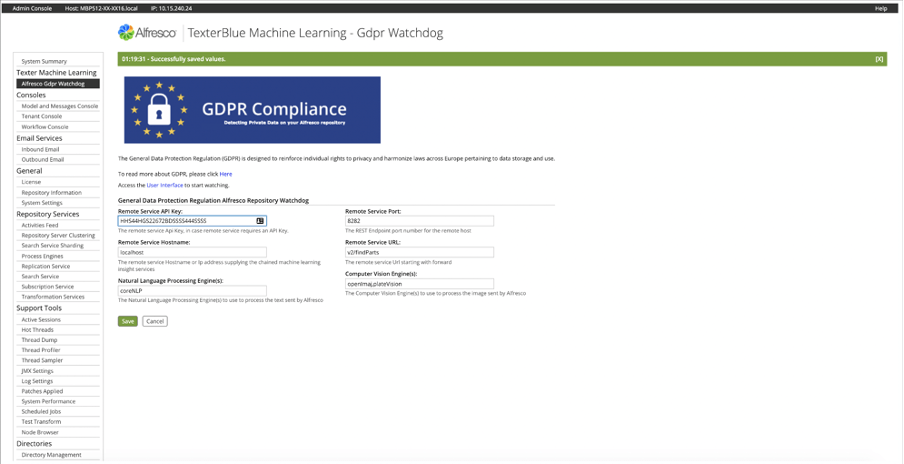
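On the client side, the four fields described above could be modelled and filtered by confidence roughly as follows. The record names, the rectangle shape assumed for “regionOfInterest” and the sample values are all guesses for illustration; the real JSON layout may differ. The sketch assumes Java 16 or later.

```java
import java.util.List;

class TrustFilterSketch {

    /** Assumed rectangle shape for "regionOfInterest"; the real JSON layout may differ. */
    record Region(int x, int y, int width, int height) {}

    /** Mirrors the four fields named in the text. */
    record RecognizedInfo(Region regionOfInterest, String infoType, String associatedInfo, double trust) {}

    /** Keeps only the items whose trust is at least minTrust. */
    static List<RecognizedInfo> atLeast(List<RecognizedInfo> items, double minTrust) {
        return items.stream().filter(item -> item.trust() >= minTrust).toList();
    }

    public static void main(String[] args) {
        // Made-up values, not real TML output.
        List<RecognizedInfo> items = List.of(
                new RecognizedInfo(new Region(10, 20, 80, 30), "licensePlate", "AA-00-AA", 0.93),
                new RecognizedInfo(new Region(200, 40, 50, 50), "face", "", 0.41));
        System.out.println(atLeast(items, 0.5).size()); // 1
    }
}
```

The threshold is a client-side policy choice: a stricter value reduces false positives at the cost of possibly missing some PII.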

TML on Alfresco – GDPR Watchdog

Having such a powerful tool at your disposal, it should be illegal not to integrate with Alfresco, a top-level ECM provider.

Here comes GDPR Watchdog, a component which interacts with TML, providing the ability to reconfigure TML’s controller behaviour at runtime, without the need to restart Alfresco itself.

Goal

The main objective of GDPR Watchdog is to provide an independent service within Alfresco to detect and anonymize Personally Identifiable Information (PII), whether in pictures, text or documents.

These actions follow from GDPR’s right to be forgotten (right to erasure): organizations must not only protect personal data but also build mechanisms to erase personal data when individuals request it.

How it works – a bird’s eye view

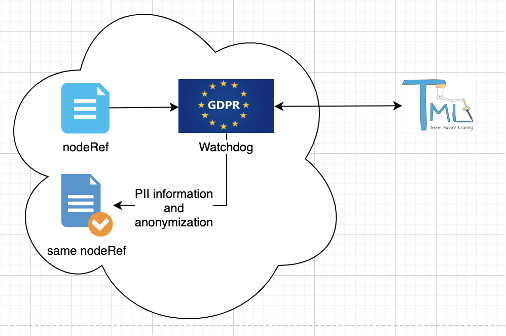

GDPR Watchdog connects Alfresco and TML, as shown in the following diagram:

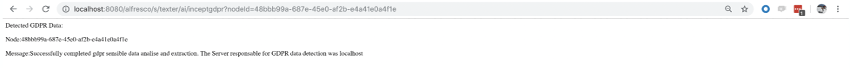

Given one or more nodeRefs, GDPR Watchdog contacts TML to obtain the PII and its location within the document. With that information, it can attach the PII details to the document (using an aspect) and produce a redacted version of the document (anonymized content).

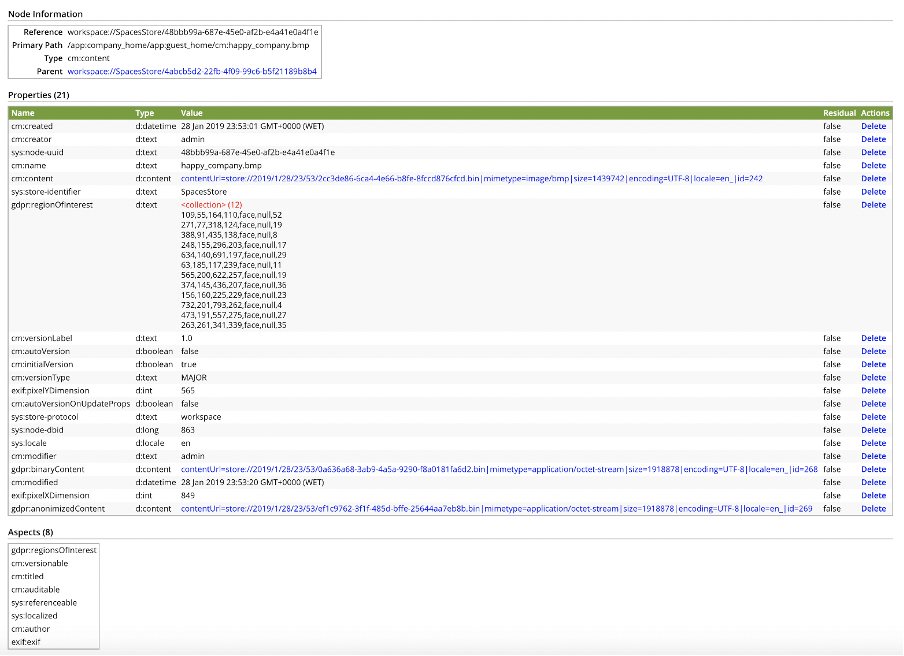

This example shows the output of GDPR Watchdog. The original node is modified to gain:

- the location of the PII (gdpr:regionOfInterest)

- a binary content indicating where the PII is (gdpr:binaryContent)

- another binary content with the content anonymized (gdpr:anonimizedContent).
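To give an idea of how an anonymized copy could be produced from reported regions, here is a minimal sketch using only the Java standard library. It paints solid black rectangles, which is a simplification chosen for this example rather than the Watchdog's actual redaction method; the class name and the use of `java.awt.Rectangle` for regions are assumptions.

```java
import java.awt.Color;
import java.awt.Graphics2D;
import java.awt.Rectangle;
import java.awt.image.BufferedImage;
import java.util.List;

class RedactionSketch {

    /** Returns a copy of the image with every region painted over in solid black. */
    static BufferedImage redact(BufferedImage source, List<Rectangle> regions) {
        BufferedImage copy = new BufferedImage(
                source.getWidth(), source.getHeight(), BufferedImage.TYPE_INT_RGB);
        Graphics2D g = copy.createGraphics();
        try {
            g.drawImage(source, 0, 0, null); // start from the original pixels
            g.setColor(Color.BLACK);
            for (Rectangle r : regions) {
                g.fillRect(r.x, r.y, r.width, r.height);
            }
        } finally {
            g.dispose();
        }
        return copy;
    }

    public static void main(String[] args) {
        BufferedImage picture = new BufferedImage(100, 60, BufferedImage.TYPE_INT_RGB);
        // Hypothetical region, as if it came from gdpr:regionOfInterest.
        BufferedImage anonymized = redact(picture, List.of(new Rectangle(10, 10, 40, 20)));
        System.out.println(anonymized.getWidth() + "x" + anonymized.getHeight());
    }
}
```

Working on a copy leaves the original content untouched, which matches the idea of storing the anonymized version as a separate binary content.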

How it works – nuts and bolts view

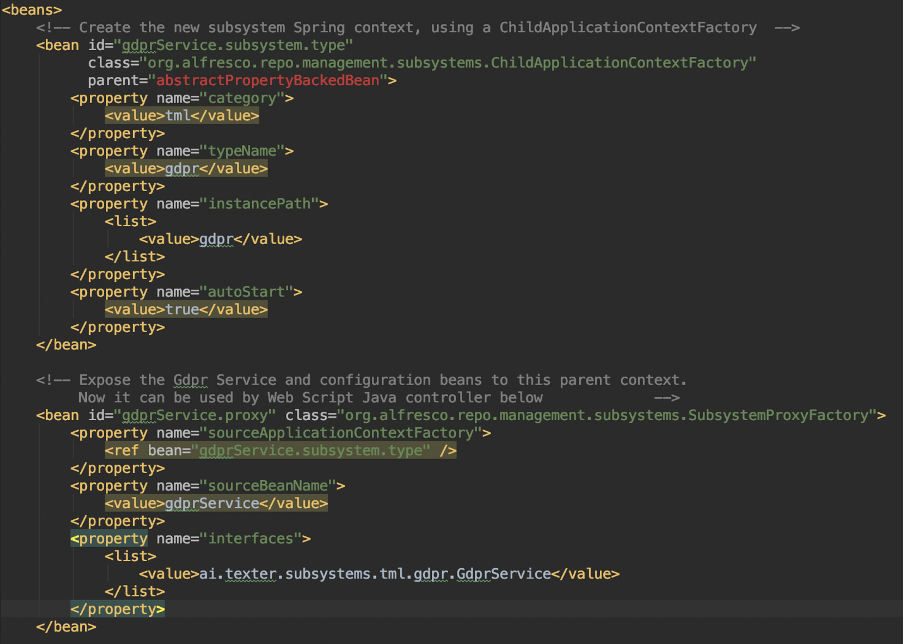

GDPR Watchdog, as a well-behaved component within Alfresco:

- runs on an isolated Spring application context

- can be started/stopped independently of the repository

- can be configured at runtime via JMX

Subsystem

To enable this behaviour, the GDPR subsystem uses ChildApplicationContextFactory, and the GDPR service is exposed to the Alfresco context using Subsyste.

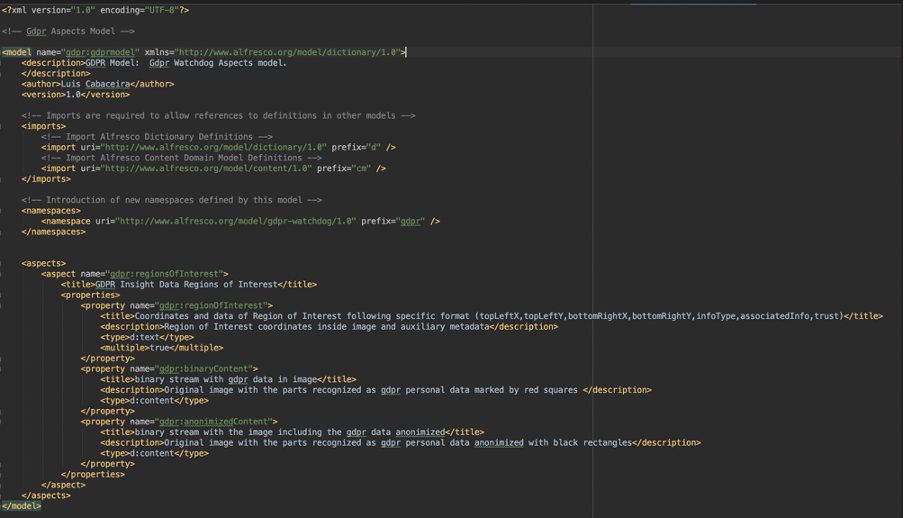

Content-model

Because the output of the GDPR Watchdog is an updated node, a content-model needs to be defined, with the aspects/properties described in earlier examples.

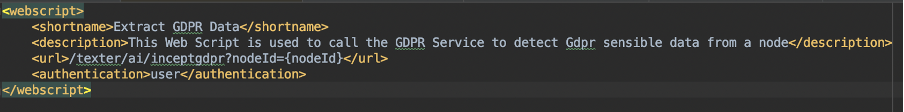

Webscript

To process a single nodeId, the user can call a webscript:

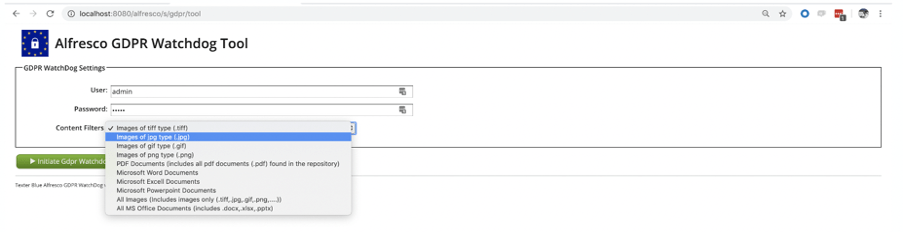

Usage

To detect, identify and anonymize PII, you can use a webscript (for a single node) or a crawler (for the whole repository), which can traverse the repository by mimetype.

Configuration

GDPR Watchdog can be configured without restarting Alfresco. It is even possible to configure which logic runs on TML according to the type of document.

Webscript for individual nodeId processing

To run the Watchdog on a single document, call a webscript with the desired nodeId.

Repository crawler for a specific mime-type

It’s also possible to traverse the repository, filtered by mime-type, and run the Watchdog on the documents found.
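The combination of mime-type filtering and per-type configuration can be sketched with plain in-memory data. This does not use the Alfresco API: `Node`, `configure` and `crawl` are hypothetical names, the `"default"` fallback is an assumption, and the "requests" are just strings describing what would be sent to TML. It assumes Java 16 or later.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

class CrawlerSketch {

    /** Hypothetical stand-in for a repository node: just an id and a mime-type. */
    record Node(String nodeId, String mimetype) {}

    /** Mime-type to engine list; a concurrent map so entries can be changed while running. */
    private final Map<String, String> enginesByMimetype = new ConcurrentHashMap<>();

    /** Sets (or replaces) the engines used for one mime-type, without any restart. */
    void configure(String mimetype, String engines) {
        enginesByMimetype.put(mimetype, engines);
    }

    /** Visits nodes of the given mime-type and returns the requests that would be sent. */
    List<String> crawl(List<Node> repository, String mimetype) {
        String engines = enginesByMimetype.getOrDefault(mimetype, "default");
        List<String> requests = new ArrayList<>();
        for (Node node : repository) {
            if (mimetype.equals(node.mimetype())) {
                requests.add(node.nodeId() + " -> " + engines);
            }
        }
        return requests;
    }

    public static void main(String[] args) {
        CrawlerSketch crawler = new CrawlerSketch();
        crawler.configure("image/jpeg", "licensePlate,WatsonFD");
        List<Node> repository = List.of(
                new Node("node-1", "image/jpeg"),
                new Node("node-2", "application/pdf"));
        System.out.println(crawler.crawl(repository, "image/jpeg")); // [node-1 -> licensePlate,WatsonFD]
    }
}
```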

Example

Using the same example from TML, the original Alfresco node, after being processed by GDPR Watchdog, gets TML’s output in a property, as well as two binary contents: one with red boxes indicating where the PII is and another with the content anonymized.

Original picture

Anonymized content